The excitement around AI (whatever it means lately) is undeniable. From optimizing supply chains to personalizing customer experiences, the potential for AI to drive business value is constantly in the spotlight.

However, amid the hype, a crucial conversation is missing: the true cost of AI.

It’s not just about the initial software and talent expenses; it’s about the significant, often invisible, financial and environmental burdens that come with AI adoption.

The Environmental Footprint of AI

We often think of digital technologies as “clean” because they don’t produce physical waste. For AI, this couldn’t be further from the truth. Training a single large AI model, such as a large language model (LLM) or a complex image recognition system, consumes an immense amount of energy, because these models rely on powerful, energy-hungry GPUs to perform the vast number of calculations needed for learning.

The Carbon Cost

The energy demands of AI are staggering, and since much of the world’s electricity is still generated from fossil fuels, this translates directly to a substantial carbon footprint.

- By one widely cited estimate, training a single LLM can generate emissions equivalent to those of five cars over their entire lifetimes. Another study estimated that training a single complex model produced over 552 tons of carbon dioxide (CO2), roughly the amount produced by 300 round-trip flights between New York and San Francisco.

- A single ChatGPT query is estimated to consume five times more electricity than a simple Google search. Multiplied across billions of queries, the cumulative impact is immense.
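The point about scale is easiest to see with rough numbers. The sketch below multiplies a per-query energy figure by a daily query volume and then converts the result to CO2. Only the five-times ratio comes from the text. The other inputs are assumptions chosen for illustration: 0.3 Wh per web search (a commonly quoted older estimate), one billion AI queries per day, and a grid intensity of 0.4 kg CO2 per kWh. The outputs therefore show the shape of the calculation, not a measured footprint.

```python
# Illustrative scaling of per-query energy to daily totals.
# All inputs except the 5x ratio are assumptions, not measurements.
SEARCH_WH = 0.3             # assumed energy per web search, in watt-hours
AI_TO_SEARCH_RATIO = 5.0    # "five times more electricity", from the text
QUERIES_PER_DAY = 1_000_000_000  # hypothetical daily AI query volume
GRID_KG_CO2_PER_KWH = 0.4   # assumed grid carbon intensity

def daily_energy_mwh(queries_per_day: float, wh_per_query: float) -> float:
    """Total daily energy in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return queries_per_day * wh_per_query / 1_000_000

def daily_co2_tonnes(energy_mwh: float, kg_per_kwh: float) -> float:
    """Convert MWh to kWh, apply grid intensity (kg), then express in tonnes."""
    return energy_mwh * 1_000 * kg_per_kwh / 1_000

ai_wh = SEARCH_WH * AI_TO_SEARCH_RATIO               # 1.5 Wh per AI query
energy = daily_energy_mwh(QUERIES_PER_DAY, ai_wh)    # 1,500 MWh per day
co2 = daily_co2_tonnes(energy, GRID_KG_CO2_PER_KWH)  # 600 tonnes per day
print(f"{ai_wh:.1f} Wh/query, {energy:,.0f} MWh/day, {co2:,.0f} t CO2/day")
```

The per-query figure is tiny on its own. The volume multiplier is what turns it into hundreds of tonnes of CO2 a day under these assumptions.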

The Water Footprint

Data centers, the physical infrastructure that houses AI, require vast amounts of water for cooling. This is a hidden cost that can strain local water supplies, particularly in water-scarce regions.

- A medium-sized data center can consume up to 25.5 million liters of water annually just for cooling, roughly as much water as 300,000 people use in a single day.

- Companies are already seeing the impact. Microsoft’s global water consumption increased by 34% in a single year, reaching 6.4 million cubic meters, largely attributed to the demands of its data centers and AI operations. Similarly, Google’s water use rose by 20% in the same period, reaching over 19.5 million cubic meters.

- Some researchers estimate that a short conversation of 10–50 queries with an AI model can consume around 500 milliliters of water, the equivalent of a small bottle.
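The water figures are easier to compare once they are reduced to common units. This short calculation only reworks numbers already quoted in this section. It recovers three things:

- the implied per-person daily use behind the 300,000-people comparison (about 85 liters);
- the water per query implied by the 500 mL conversation estimate (10 to 50 mL);
- the prior-year totals implied by the Microsoft and Google growth rates.

```python
# Reworking the water figures quoted above into comparable units.
annual_cooling_litres = 25.5e6  # medium-sized data center, per year
people = 300_000
litres_per_person = annual_cooling_litres / people  # one person's use for one day

# A 10-50 query conversation using about 500 mL works out per query as:
ml_per_query_max = 500 / 10  # shortest conversation, most water per query
ml_per_query_min = 500 / 50  # longest conversation, least water per query

def prior_year(current: float, growth: float) -> float:
    """Back out last year's value from this year's value and its growth rate."""
    return current / (1 + growth)

msft_prior_m3 = prior_year(6.4e6, 0.34)   # Microsoft: 6.4M m3 after +34%
goog_prior_m3 = prior_year(19.5e6, 0.20)  # Google: 19.5M m3 after +20%

print(f"{litres_per_person:.0f} L per person per day")
print(f"{ml_per_query_min:.0f}-{ml_per_query_max:.0f} mL per query")
print(f"Microsoft prior year: {msft_prior_m3 / 1e6:.2f} million m3")
print(f"Google prior year: {goog_prior_m3 / 1e6:.2f} million m3")
```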

These figures demonstrate that the promise of a digital, “clean” AI is a myth. The environmental impact is real, and it’s a critical factor that executives must consider before making large-scale AI investments.

The Financial Costs You’re Not Being Told About

Beyond the environmental impact, the financial implications of AI are often underestimated. The initial investment is just the tip of the iceberg; the operational costs are what can truly shock an unprepared organization.

Training and Infrastructure Costs

The upfront cost of training a state-of-the-art AI model is immense. This is not just a one-time fee; it’s an ongoing capital expenditure for the most advanced models.

- Model Training: The cost of training a single LLM like OpenAI’s GPT-4 is estimated to have exceeded $100 million. Google’s Gemini Ultra model is estimated to have cost up to $191 million to train.

- Data Center Build-out: To keep up with AI demand, companies are expected to invest trillions of dollars in data center infrastructure. By 2030, data centers are projected to require $5.2 trillion in capital expenditures worldwide to meet AI demand alone. The largest share, about $3.1 trillion, will go to technology developers for chips and computing hardware.

- Operational Costs: Even after a model is trained, the cost to run it is substantial. It’s estimated that a single ChatGPT query costs around 0.36 cents to run, with OpenAI spending approximately $700,000 per day to keep the model operational.
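The two operating-cost figures can be cross-checked against each other: dividing the daily spend by the cost of one query gives the query volume they jointly imply. The sketch uses the $700,000-per-day figure from the text and a per-query cost of 0.36 cents, which is how the underlying analyst estimate is usually quoted. Treat both as rough outside estimates rather than OpenAI data.

```python
# Relating the two operating-cost figures quoted in the text.
DAILY_COST_USD = 700_000     # reported daily cost to keep the model running
COST_PER_QUERY_USD = 0.0036  # 0.36 cents per query

# Dividing daily spend by per-query cost gives the implied query volume.
implied_queries_per_day = DAILY_COST_USD / COST_PER_QUERY_USD
annual_cost_usd = DAILY_COST_USD * 365

print(f"implied volume: {implied_queries_per_day:,.0f} queries/day")
print(f"annualized cost: ${annual_cost_usd:,.0f}")

# Sensitivity: how the implied volume moves with the per-query cost.
for cents in (0.36, 1.0, 36.0):
    queries = DAILY_COST_USD / (cents / 100)
    print(f"{cents:>5} cents/query -> {queries:,.0f} queries/day")
```

The loop shows why the unit matters. At 36 cents per query, the same daily budget would cover fewer than two million queries.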

The Price of Talent

AI is a human-led endeavor, and the specialized talent it requires commands a premium. In the U.S., average annual salaries for AI researchers range from about $130,000 to over $173,000, with top-tier professionals commanding even higher compensation. Machine learning engineers typically earn between $120,000 and $160,000 a year. R&D staff costs, which cover these highly paid researchers and engineers, can account for a significant portion of the total: from 29% to 49% of the final price tag for building a new AI model.

Data and Maintenance Overhead

An AI model is a living system that requires constant care and feeding.

The collection, cleaning, and preparation of high-quality data can account for 15% to 25% of the total project cost. For a complex project, creating a high-quality training dataset can cost between $10,000 and $90,000, depending on the complexity of the data and annotation.

After deployment, AI models need continuous monitoring, updating, and fine-tuning. This includes costs for cloud hosting, data storage, and the salaries of the team maintaining the system. As models interact with more users and data, these maintenance costs rise accordingly.

These figures illustrate that AI is not a cheap or simple investment. It requires a long-term commitment to significant financial outlays in infrastructure, talent, and ongoing operations. Before committing, executives must ensure the potential value outweighs these steep, and often hidden, costs.

A Review of Karen Hao’s “Empire of AI”

For deeper insight into these issues, I highly recommend Karen Hao’s book, “Empire of AI: Dreams and Nightmares in Sam Altman’s OpenAI.” An award-winning journalist with years of close access to the AI industry, Hao offers a powerful, eye-opening account that goes far beyond the headlines.

Hao’s central thesis is that the AI industry, spearheaded by companies like OpenAI, operates like a modern colonial empire. Just as historical empires extracted natural resources and cheap labor from their colonies, the AI industry extracts massive amounts of energy, water, and data from around the globe to fuel its growth.

The book is an unflinching look at:

- The Environmental Cost: Hao reveals the staggering energy consumption of data centers, which are often built in developing nations to access cheap power and water, often at the expense of local communities.

- The Hidden Labor: She exposes the exploitative labor practices behind the scenes, where low-paid workers in countries like Kenya and Venezuela perform the critical, often psychologically traumatic, work of data labeling and content moderation for models like ChatGPT.

- The Pursuit of Power: The book chronicles OpenAI’s transformation from a research-focused nonprofit to a profit-driven corporation, highlighting the ideological conflicts and the unchecked ambition that prioritizes growth and dominance over ethical considerations.

“Empire of AI” is more than just a history of OpenAI; it’s a call to action. It forces us to confront the reality that the “AI revolution” isn’t a magical, clean process but a resource-intensive, often-exploitative enterprise. By understanding the “other side” of AI, executives can move beyond the hype and make more responsible, strategic decisions that consider the true, long-term impact of their investments.

A Smarter Path Forward - Investing in “Wise AI”

The solution is not to stop investing in AI but to invest in it more wisely. Instead of chasing the latest large-scale, general-purpose models, executives can adopt a more strategic and ethical approach that prioritizes efficiency and tangible value.

1. Small Language Models (SLMs) over Large Models

For many business applications, a massive, general-purpose LLM is like using a freight train to deliver a single package — it’s overkill. SLMs are a powerful, more sustainable alternative.

- Cost-Effective: SLMs can be 10 to 30 times more affordable to train and run than LLMs, drastically reducing both financial and environmental costs.

- Speed and Efficiency: Their smaller size allows them to run directly on local devices or in a private cloud environment. This enables faster responses and avoids the network latency of calls to a remote API.

- Privacy-First: Since they can operate without sending data to a third-party cloud, SLMs are ideal for handling sensitive and proprietary information, offering a significant advantage for industries like finance and healthcare.
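To translate the “times cheaper” range into percentages: a model that is N times cheaper costs 1/N as much, so the saving is 1 - 1/N. The sketch below applies that to a hypothetical $50,000 monthly LLM bill, an illustrative number rather than a benchmark.

```python
def fractional_saving(times_cheaper: float) -> float:
    """A model N times cheaper costs 1/N as much, so the saving is 1 - 1/N."""
    if times_cheaper <= 0:
        raise ValueError("times_cheaper must be positive")
    return 1.0 - 1.0 / times_cheaper

HYPOTHETICAL_LLM_MONTHLY_USD = 50_000  # illustrative spend, not a benchmark

for n in (5, 10, 30):
    slm_cost = HYPOTHETICAL_LLM_MONTHLY_USD / n
    print(f"{n:>2}x cheaper: ${slm_cost:,.0f}/month, {fractional_saving(n):.0%} saved")
```

The 10-to-30-times range therefore corresponds to savings of roughly 90% to 97%. A more modest 5x reduction already amounts to 80%.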

Instead of deploying a single, all-encompassing LLM, consider a modular approach where a series of fine-tuned SLMs handle specific tasks, such as customer service chatbots, internal data summarization, or code completion.

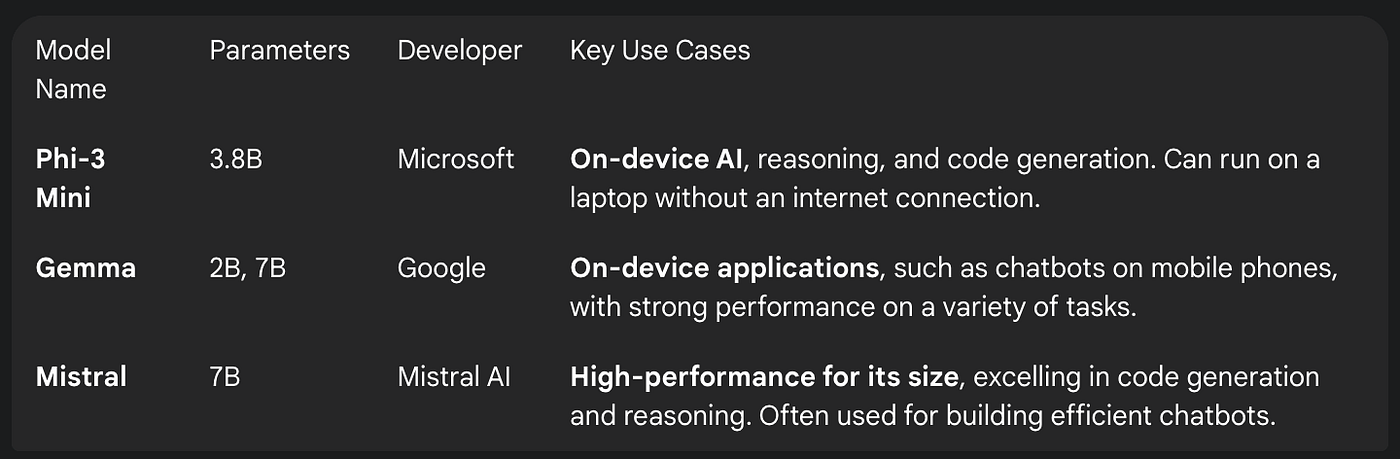

These are just a few of the many open-source SLMs available on platforms like Hugging Face, all of which are designed for efficiency and customization.

- Customer Service & Internal Knowledge Bases: Imagine a customer service chatbot that lives entirely on your company’s servers or even on a local device. Instead of paying for every API call to a cloud-based LLM, a fine-tuned SLM like Mistral 7B can handle a high volume of customer queries or summarize internal documents, all while keeping sensitive data securely on-premises.

- Legal & Medical Document Summarization: For fields with sensitive, domain-specific language, an open-source model like T5-Small can be fine-tuned on legal contracts or medical records to summarize key clauses or extract critical information. This reduces the risk of data breaches and is far more cost-effective than using a general-purpose LLM for a highly specialized task.

- Edge Devices and Industrial Automation: SLMs are ideal for scenarios where low latency is critical. In a factory setting, a small model could be embedded in a machine to process natural language commands, diagnose issues, or analyze sensor data in real time, without relying on a slow, costly cloud connection.
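The modular approach described earlier, where several fine-tuned SLMs each handle one task, boils down to a small router in front of the models. Below is a minimal sketch under clear assumptions:

- The three handler functions are hypothetical stand-ins for locally hosted models (for example, a fine-tuned chat model for support or a small summarizer for contracts).
- Keyword matching is a deliberately simple placeholder for a real intent classifier.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-ins for locally hosted, task-specific SLMs. In practice each
# function would call a fine-tuned small model; here they only tag the request.
def support_slm(text: str) -> str:
    return f"[support-slm] {text}"

def summarizer_slm(text: str) -> str:
    return f"[summarizer-slm] {text}"

def code_slm(text: str) -> str:
    return f"[code-slm] {text}"

# Ordered routing table: the first entry whose keywords appear in the request wins.
ROUTES: List[Tuple[Tuple[str, ...], Callable[[str], str]]] = [
    (("summarize", "summary", "contract", "clause"), summarizer_slm),
    (("function", "bug", "compile", "stack trace"), code_slm),
]

def route(request: str, default: Callable[[str], str] = support_slm) -> str:
    """Dispatch a request to one task-specific model, falling back to support."""
    lowered = request.lower()
    for keywords, handler in ROUTES:
        if any(keyword in lowered for keyword in keywords):
            return handler(request)
    return default(request)

print(route("Please summarize this supplier contract"))
print(route("Why does this function throw a bug?"))
print(route("Where is my order?"))
```

Only the matched model runs for each request, which is where the cost and energy savings come from. Adding a new task means adding one entry to the table rather than retraining a single large model.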

By leveraging these smaller, more focused models, your organization can build targeted AI solutions at costs that can be 80% lower or more, with faster performance and a dramatically smaller environmental footprint. This is not just a technological choice; it’s a strategic decision for a more responsible and efficient future.

2. Valuing and Benchmarking AI for ROI

In the past, the valuation of AI projects was often based on “hype” and future potential. It’s time to bring a more rigorous, data-driven approach to AI investment.

- Define ROI Metrics Upfront: Before you invest a single dollar, define what success looks like. Is it increased revenue, reduced operational costs, or improved customer satisfaction? Don’t just track “AI adoption”; track its measurable impact on your business KPIs.

- Establish Baselines: Use a baseline of your current performance to measure the impact of the AI. For example, if you’re using AI for customer service, measure the average time to resolve a ticket before and after implementation.

- Audit for Efficiency: Continuously audit your AI systems to ensure they are performing as expected and are not consuming unnecessary resources. Look for opportunities to optimize models for lower energy and computational costs.
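The baseline-and-ROI steps above can be sketched as a short calculation for the customer-service example. Every input here is hypothetical:

- the sampled resolution times;
- the 20,000 tickets per year;
- the $40 loaded hourly cost of an agent;
- the $120,000 annual cost of the AI system.

“ROI” is the simple (benefit - cost) / cost ratio, not a discounted measure such as NPV.

```python
from statistics import mean

def percent_change(before: float, after: float) -> float:
    """Relative change from a baseline, in percent (negative = reduction)."""
    return (after - before) / before * 100.0

def annual_labor_savings(tickets_per_year: int, minutes_saved: float,
                         cost_per_hour: float) -> float:
    """Value of agent time freed up, assuming saved minutes are fully reusable."""
    return tickets_per_year * minutes_saved / 60.0 * cost_per_hour

def simple_roi(benefit: float, cost: float) -> float:
    """Net gain over cost, in percent."""
    return (benefit - cost) / cost * 100.0

# Hypothetical resolution times (minutes) sampled before and after an AI pilot.
baseline = [42, 38, 55, 47, 40]
pilot = [30, 28, 35, 33, 29]

before, after = mean(baseline), mean(pilot)
change = percent_change(before, after)
benefit = annual_labor_savings(20_000, before - after, 40.0)
roi = simple_roi(benefit, 120_000.0)
print(f"avg resolution {before:.1f} -> {after:.1f} min ({change:+.1f}%)")
print(f"annual benefit ${benefit:,.0f}, ROI {roi:.1f}%")
```

Measuring the baseline first is what makes the “after” number meaningful. Without it, a 31-minute average says nothing about whether the AI helped.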

3. Ethical AI as a Strategic Initiative

Moving forward, ethical considerations are not just a “nice-to-have”; they are a critical risk management and reputational exercise.

- Accountability and Governance: Establish clear frameworks for who is responsible for the outputs of your AI systems. This includes creating a culture of accountability where potential biases in data and outcomes are regularly reviewed and addressed.

- Transparency: Understand where your AI models get their data from and how their decisions are made. A transparent AI system is not only more trustworthy but also easier to debug and improve.

By adopting this “Wise AI” approach, executives can move past the hype and create lasting value for their organizations while also fulfilling their responsibility to their stakeholders, the environment, and society as a whole.

4. Support Independent, Community-Rooted Research

While major tech companies dominate the AI headlines, some of the most critical work is happening outside the corporate ecosystem. Independent research institutes are leading the charge on ethical, community-focused AI, and executives should be aware of and even support their work.

A prime example is the Distributed AI Research (DAIR) Institute, founded by Dr. Timnit Gebru. DAIR’s mission is to counter the pervasive influence of “Big Tech” by creating a space for AI research that is not driven by profit motives.

DAIR’s work is a case study in what “Wise AI” can look like:

- Human-Centered Design: Instead of chasing ever-larger models, DAIR focuses on AI that solves specific, tangible problems for communities, such as using computer vision to analyze the effects of historical spatial apartheid in South Africa.

- Ethical Labor Practices: They highlight the hidden labor of data workers and advocate for fair compensation and ethical treatment, which directly links to the long-term financial and reputational costs of a business.

- Prioritizing Safety over Speed: Research by the institute and its founder, including seminal work on the dangers of large language models, serves as a crucial warning system, helping businesses navigate the hidden risks of unvetted AI.

By understanding and supporting organizations like DAIR, executives can move beyond the hype and ensure their AI strategies are built on a foundation of ethical principles and long-term sustainability. It is a strategic move that not only mitigates risk but also contributes to a more responsible and equitable technological future.

The Empire of AI is not a foregone conclusion. It is a choice. By asking the right questions, insisting on transparency, and leading with a “Wise AI” strategy, executives have the power to build an AI future that is not only profitable but also sustainable and equitable. The next wave of AI leaders will be those who see not just the promise, but the entire picture: the other side of AI.